There are major differences in the ways that AI and the human brain work. Instead of wringing our hands about the coming AI threat, we should be thinking of ways to ally with new technologies.

For clues about our cultural attitudes towards robots and artificial intelligence (AI), we need only turn to Star Wars. In one of the most famous lines of the series’ Return of the Jedi installment, the squid-faced Admiral Ackbar exclaims, “It’s a trap!” as he realizes that the rebel fleet sent to attack the new Death Star has flown straight into the hands of Imperial fighters. This classic line also sums up the way many businesses and media outlets have spoken about the newest forms of artificial intelligence.

Companies have been issuing nonstop reminders that robots are taking over an increasing number of jobs, leading to ever larger-scale unemployment. The recent opening of Amazon Go has taken this issue to new heights. At Amazon Go, customers simply pick up the items they want to buy and walk out, and a receipt pops up on their phone. At a stroke, cashiers are no longer required.

Recent studies on AI-led unemployment make depressing reading. One key report estimates that some 47% of US jobs are at high risk of automation in the near future. Another study suggests that 45% of the daily tasks currently done by humans could be automated if current trends continue. In our recent book, Understanding How the Future Unfolds: Using Drive to Harness the Power of Today’s Megatrends, we mentioned the case of a chief financial officer at an investment bank who was tasked with cutting his staff by 80%, because off-the-shelf digital technologies could now do the jobs those people held. From this vantage point, it seems that human beings may soon go the way of the horse.

The bad news does not stop there, though: it isn’t just the job market that is under threat; to some, mankind itself is besieged by intelligent machines. Elon Musk has called the prospect of AI “our greatest existential threat”, and Bill Gates has echoed this sentiment. The late Professor Stephen Hawking, meanwhile, said that creating AI would be the biggest event in human history, and might also be the last.

AI’s true colours

We don’t presume to be qualified to dismiss the opinions of these great thinkers. But what we can do is highlight the reality of AI right now. Despite the doomsday views, AI’s current capabilities are so limited that a machine-dominated world remains a concept that belongs to a galaxy far, far away.

For all the endless media coverage of how clever computers are, it is important to understand that there isn’t really that much intelligence in AI. Intelligence refers to the capacity for logic, understanding, self-awareness, learning, emotional knowledge, planning, creativity and problem-solving. Yet, at the moment, machines can do very few of these things. Intelligence implies the ability to think. But AI does not think.

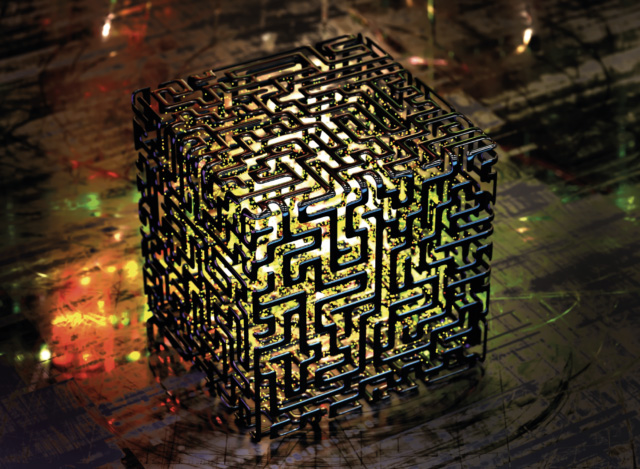

The true ability of AI lies in processing huge amounts of data from many sources and then, based on this, making its best guess at the right decision output. Over time, the more the machines perform the same task, the more they can adjust their information processing and, consequently, the better they become at guessing. This is pretty much how machines ‘learn’.

One may object that machines these days can read text, see images and hear natural speech with unprecedented accuracy. For instance, at our AI solutions company Nexus Frontier Tech, our intelligent scanner can already achieve 99% accuracy in reading text, whether typed or handwritten, and whether presented in digital or physical form. This makes machines look very capable and smart. Yet, under the hood, the mechanism is not very different from how our brains work, which is why most AI development today is based on artificial neural networks.

Our brains work like this: we draw data inputs from multiple sources, consider whether each piece of information is valuable to our decision, and then process it to generate an output (an opinion, view or decision). In a similar fashion, machines take input data from different places, organize it, assign a weight to each input, do the calculations and then churn out possible answers.
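To make the “assign a weight, do the calculations, churn out an answer” step concrete, here is a minimal sketch of a single artificial neuron, the basic unit of the neural networks mentioned above. The input values, weights and bias are made-up numbers for illustration only; real systems chain together thousands or millions of such units.

```python
import math

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs, squashed into (0, 1) by a logistic sigmoid."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Each input is multiplied by its weight: a larger weight means that
    # source counts for more in the final decision.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # The sigmoid turns the raw score into a value between 0 and 1 that can
    # be read as how strongly the evidence points towards "yes".
    return 1.0 / (1.0 + math.exp(-total))

# Hypothetical example: three signals from different sources, with
# invented weights reflecting how much each source is trusted.
signals = [0.9, 0.2, 0.6]
weights = [1.5, -0.5, 0.8]
score = neuron_output(signals, weights, bias=-1.0)
print(f"{score:.3f}")
```

Note that the neuron never “knows” anything: it only combines weighted evidence into a number, which is the point the authors make in the next paragraph.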

We can see, then, that machines do not and cannot provide definitive answers. In fact, they do not even ‘know’ the answers at all: all they do is weigh information and ideas from different sources and then provide the statistically most probable answer. A mistake made by IBM’s famous AI computer Watson on the US game show Jeopardy! speaks volumes about this. At the end of the first day of the competition, the ‘Final Jeopardy’ category was ‘US Cities’, and the clue was: “Its largest airport was named for a World War II hero; its second largest, for a World War II battle.” The correct response was “What is Chicago?” (O’Hare and Midway). Watson guessed “What is Toronto?????” Wrong city. Wrong country.

There are many reasons why Watson was confused by this question, including the grammatical structure of the clue, the presence of a city in Illinois named Toronto and the fact that the Toronto Blue Jays play baseball in the American League. As a result, Watson’s confidence level was very low: just 14%. Watson knew that it didn’t know the answer, as indicated by the five question marks.
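The idea of picking the “statistically most probable answer” while still knowing how unsure you are can be sketched in a few lines. This is a toy illustration, not how Watson actually worked: raw scores for candidate answers are converted into probabilities with the softmax function, the highest is chosen, and it is flagged as uncertain if it falls below a confidence threshold. The candidate scores and the 0.5 threshold are invented for the example and are not Watson’s real figures.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {k: math.exp(v - top) for k, v in scores.items()}
    total = sum(exps.values())
    return {k: v / total for k, v in exps.items()}

def best_guess(scores, threshold=0.5):
    """Return the most probable answer, its probability and whether it
    clears the (hypothetical) confidence threshold."""
    probs = softmax(scores)
    answer = max(probs, key=probs.get)
    return answer, probs[answer], probs[answer] >= threshold

# Invented raw scores: when many candidates score similarly, the
# probability is spread thinly and even the winner has low confidence.
candidates = {"Toronto": 1.2, "Chicago": 1.0, "Omaha": 0.9,
              "Dallas": 0.8, "Boston": 0.7, "Denver": 0.6}
answer, p, confident = best_guess(candidates)
print(answer, round(p, 2), "confident" if confident else "uncertain")
```

The machine still outputs an answer, but the low probability attached to it is the sketch’s equivalent of Watson’s question marks.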

What makes AI more powerful than ever is that humans can train machines by feeding them the correct answers (so-called supervised learning). With this newly ingested information, the machines can adjust their weightings next time, increasing the accuracy of their responses over time, just as our brains do.
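A minimal sketch of supervised learning, using the classic perceptron learning rule as a stand-in (production systems typically use more elaborate methods such as gradient descent on large networks): the machine sees each input together with the correct answer, makes a guess, and nudges its weights only when the guess is wrong. The toy dataset (the logical OR function), the learning rate and the number of passes are illustrative choices.

```python
def predict(weights, bias, x):
    """Guess 1 if the weighted sum of the inputs is positive, else 0."""
    s = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if s > 0 else 0

def train_perceptron(examples, epochs=20, lr=0.1):
    """Perceptron rule: adjust the weights whenever a guess is wrong."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, label in examples:
            error = label - predict(weights, bias, x)  # -1, 0 or +1
            # No change when the guess was right; otherwise shift each
            # weight in the direction that would have reduced the error.
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Labelled examples as (inputs, correct answer): here, the logical OR.
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_perceptron(data)
print([predict(w, b, x) for x, _ in data])
```

Nobody writes a rule saying “answer 1 if either input is 1”; the behaviour emerges from repeated correction, which is the contrast with rule-based programming drawn in the next paragraph.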

This way of learning, by steadily improving the ability to guess the right answers, contrasts strongly with previous attempts at creating AI. Scientists once believed that the best path to machine intelligence was explicit programming. However, this approach failed, ultimately leading to the so-called ‘AI winter’, because humans would have had to teach the machines far too many rules for them to predict answers accurately. It was not until the more recent shift from deterministic to probabilistic computing that AI received a second, and much better, chance.

What AI can actually do

In reality, then, AI is rather different from the way many people perceive it. For executives who are considering employing AI, it is therefore important to recognize that, rather than an almighty means of transforming a business and conferring a brand new competitive edge, AI is, at the moment, little more than a tool that supports existing business processes. In this sense, in the current business environment at least, it is less about AI than about IA: ‘intelligent assets’ or ‘assistance’. It simply helps us approach business in smarter ways, in particular when dealing with routine and standardized tasks. This is why most companies are using AI only in pockets rather than in full business transformations. And these sporadic deployments come predominantly in the form of smarter ways to cut costs (e.g. insurance companies being able to locate and analyse pictures of car accident damage to assess the size of claims) or better ways of helping customers (including using speech recognition to replace telephone PIN codes).

In short, the way AI has been shaping businesses is mostly restricted to intelligent automation: enhancing standardized business processes using AI. As with any other digital transformation, the key to success in employing AI technologies is not digital but transformational.

So, for any newly acquired AI technology to excel, changing existing processes and workflows, and/or developing new ones, is a must. This, in turn, means that new teams and organizational structures will also have to be introduced. People’s fear of losing their jobs will have to be allayed. Staff will need to be convinced of the value of the new AI technology being implemented. Corporate culture may have to change. The success of machines, therefore, lies squarely in how well the human activities surrounding the new technology are organized. What AI can actually do mostly depends on what humans can actually do (see below).

THE CHALLENGES OF LAUNCHING AN AI PROJECT

It was the behavioural economist Professor Dan Ariely who once said: “Big data is like teenage sex: everyone talks about it, nobody really knows how to do it, everyone thinks everyone else is doing it, so everyone claims they are doing it.” We believe this also rings true for AI. Apart from a few technologically avant-garde companies such as Google and Facebook in the US, and Tencent and Alibaba in China, the take-up of AI technologies remains low. Why?

One reason, in our experience, is unfamiliarity. While blue-sky strategic thinking about AI is easy, putting it to work at an operational level is trickier. Indeed, working out what the technology can actually deliver is often difficult, as it requires clear answers to the following questions:

- What specific functions can various AI products perform?

- What benefits can be created?

- What business issues can AI really tackle?

- What is the exact business objective that AI should be achieving?

- Is the workflow of the process to be automated properly mapped out?

- And, if not, will there be resources allocated to make sure it is?

These are some of the reasons why we always recommend that our clients engage in projects that have narrow and clearly defined business objectives when it comes to AI.

On top of this, the technical side of AI can be rather daunting. It creates a somewhat circular problem: without a good grasp of the technology, it is difficult to figure out which business objectives to pursue; and without knowing the specific aims, it is hard to see what kind of AI technologies are needed and how they would work. Our advice: stick to small initiatives that address a specific, single pain point, and do not go overboard with big, complex projects.

A bigger challenge, however, is on the human rather than the technology side. In its current incarnation, AI is nothing more than a tool. And like all business tools, it is only truly effective when the right business processes are built around it. Hence, executives need to be ready to modify existing processes and workflows, and perhaps even build new ones. Moreover, they will have to find and keep the right people, those who can tackle both business and technology issues. Inevitably, these executives will have to be able to work with both business and technology managers to ensure the smooth development, implementation and maintenance of the new capabilities.

Last but not least, the need to introduce brand new technologies and additional (sometimes even disruptive) processes often translates into a significant amount of work, so much so that companies sometimes stick to what they know, leading to inertia. Executives who are interested in taking their companies to the next level will therefore have to overcome this temptation.

The winner: alliance

It is interesting to see how Hollywood portrays AI. Back to Star Wars: in the films, AI-driven, and even self-aware, droids like R2-D2 are at their best when helping humans fly X-wings or escape from the Death Star. Even in such a technologically advanced world, machines do not replace humans.

We think the same can be said of our world right now. There is no doubt that automation led by AI and robotics will eliminate many jobs in the future. Routine work, be it white- or blue-collar, can be expected to be taken over by machines. But it is also time to dispel the myths around AI and see what it can actually do as a tool. Only in this way can we hold honest conversations about how humans and machines can forge a new alliance.

— Terence Tse is a member of Duke CE’s Global Educator Network and co-founder and managing director of Nexus Frontier Tech, specializing in AI solutions, and associate professor at ESCP Europe. Mark Esposito is a member of the teaching faculty at Harvard University’s Division of Continuing Education, and professor of business and economics at Hult International Business School. Danny Goh is a serial entrepreneur, and the partner and commercial director of Nexus Frontier Tech. Hajime Hotta is chief AI scientist at Nexus Frontier Tech.

An adapted version of this article appeared on the Dialogue Review website.