AI should be developed in ways that not only safeguard, but enhance human values.

John McCarthy, a pioneer in computer science, coined the term artificial intelligence (AI) in 1955, setting the stage for the domain’s formal inception at the 1956 Dartmouth Conference. Inspired by Alan Turing’s foundational work on machine intelligence, McCarthy envisioned AI as the science and engineering of making intelligent machines capable of performing tasks requiring human-like intelligence, such as reasoning, learning and autonomous action.

However, although McCarthy’s vision was revolutionary, it largely overlooked a crucial aspect without which all intelligence, including that of systems, is an empty shell devoid of meaning: integrity. As AI becomes more and more intertwined with societal functions, the lack of a comprehensive framework for embedding integrity from the beginning represents a critical oversight.

The main challenge for leaders now is to shape a future where the collaboration between human insight and AI amplifies value exponentially, grounded in human values. This challenge transcends the simplistic debate over human versus AI supremacy. It leads us to focus instead on achieving a synergistic relationship that not only safeguards but enhances fundamental human values through unwavering integrity.

Systems designed and operated with this purpose will embody not just artificial intelligence, but artificial integrity.

Artificial integrity

The concept of artificial integrity emphasizes the development and deployment of AI systems that uphold and reinforce human-centered values, ensuring that AI’s integration into society enhances rather than undermines the human condition. Through a holistic approach that combines external guidelines, internal operational consistency, and a commitment to a synergistic human-AI relationship, artificial integrity aims to ensure that AI systems contribute positively to society, marking a pivotal evolution in the field’s trajectory.

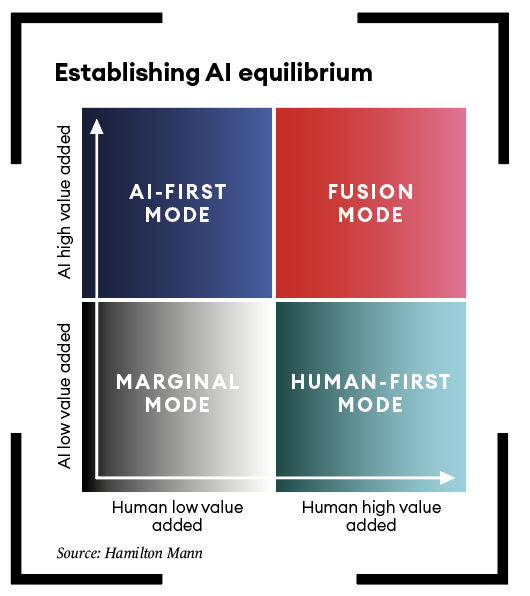

This perspective underscores the importance of carefully assessing AI’s societal impact, particularly how it balances ‘human value added’ and ‘AI value added’, which is among the most delicate and consequential considerations. It is crucial not only to map out the current interplay between human intellect and AI capabilities, but also to envision a future where the synergy between humanity and technology redefines value, labor and knowledge in ways that enhance the greater interest of society, preserving and sustaining its integrity. This equilibrium for artificial integrity can be established by exploring four distinct modes (see graphic).

Marginal mode

When it comes to value creation, there exists a quadrant where the contributions of both human and artificial intelligence are notably restrained, reflecting scenarios of limited impact. This segment captures tasks that yield minimal marginal benefit from either human or artificial intelligence inputs. Such tasks are often too inconsequential to justify significant human intellectual investment on economic grounds, yet too intricate for the present capabilities of AI.

This domain frequently encompasses foundational activities, where the roles of humans and AI are either embryonic or rudimentary, primarily involving routine and monotonous tasks that scarcely profit from higher-order cognitive or AI-driven enhancements.

Consequently, transformations in this quadrant are typically incremental, signifying a plateau of productivity where neither human nor AI inputs precipitate marked advancements. An example is document scanning for archival purposes: a task that, while manageable by humans, succumbs to monotony and error, and where AI, despite capabilities like optical character recognition (OCR), offers only marginal improvement due to challenges with non-standard inputs.

Marginal mode comprises a sector of tasks that remain fundamentally unaltered in their simplicity and limited in their demand for evolution, and represents scenarios where the deployment of sophisticated AI systems fails to justify the investment.

AI-first mode

In this paradigm AI is the linchpin, spearheading core operational functionalities. It spotlights scenarios where AI’s unparalleled strengths – its ability to rapidly process extensive datasets and deliver scalable solutions – stand out.

This AI-centric approach is particularly relevant in contexts where the speed and precision of AI significantly surpass human capabilities. AI emerges as the driving force in operational efficiency, revolutionizing processes that gain from its superior analytical and autonomous capabilities.

The deployment of AI is strategic in areas where human contribution does not add significant value. AI’s prowess in handling data-intensive tasks far exceeds human performance; it has a notable advantage in domains like big data analytics. The AI-first quadrant is where AI’s capacity to distill insights from voluminous datasets, working at speeds beyond the reach of human analysts, demonstrates critical value.

An example is observed in the financial industry, particularly in high-frequency trading. Here, AI-driven trading systems leverage complex algorithms and massive datasets to identify patterns and execute trades with a velocity and scale unachievable by human traders. It showcases the transformative potential of AI for redefining operational capabilities.

Human-first mode

In this segment, the spotlight shines brightly on the indispensable qualities of human cognition, including intuitive expertise and contextual, situational, emotional and moral discernment. AI is deployed in a supportive or complementary capacity. This approach champions human capabilities and decision-making, particularly in realms necessitating emotional intelligence, nuanced problem-solving, and moral judgment. It emphasizes the irreplaceable depth of human insight, creativity and interpersonal abilities in contexts where the intricacies of human thought and emotional depth are critical, and where AI’s current capabilities are not a match for the full breadth of human intellectual and emotional faculties.

Here, the contribution of humans is paramount, with AI serving to enhance rather than replace core human functions, especially evident in fields such as healthcare, education, social work, and the arts. These domains rely heavily on human empathy, moral judgment and creative intuition, underscoring the unmatched value humans bring to high-stakes decision-making, creative endeavors, and roles requiring profound empathy.

For instance, in psychiatry, the nuanced interpretation of non-verbal communication, the provision of emotional support, and the application of seasoned judgment underscore the limitations of AI in replicating the complex empathetic and moral considerations inherent to human interaction. This perspective is bolstered by empirical evidence, reinforcing the critical importance of the human element across various landscapes.

Fusion mode

This segment illustrates a synergistic integration where human intelligence and AI coalesce to leverage their distinct strengths: human creativity and integrity traits paired with AI’s analytical acumen and pattern recognition capabilities.

It represents an innovative approach within forward-thinking organizations that strive for a unified strategy, optimizing the combined assets of both human and technological resources. In such environments, the amalgamation of human intuition and AI precision forms a powerful alliance, elevating task execution to unprecedented levels of efficiency. In this mode, AI amplifies human capabilities, making both indispensable for peak performance and enhanced decision-making. This cooperative dynamic yields benefits unattainable by either humans or AI alone.

Research across various domains validates this synergy. In health, for example, AI can augment physicians’ capabilities with precise diagnostic suggestions, and enhance surgical precision in medical procedures. In engineering and design, it can support creative problem-solving.

This vision suggests an ecosystem where AI enriches human capabilities, promoting a synergy where human creativity, complexity and empathy are bolstered by AI’s efficiency, consistency and capacity for processing vast quantities of information.

Assessing AI and its potential impact

As AI becomes more integrated into our lives, a focus on artificial integrity will ensure that it is deployed in a manner that enriches human capabilities, respects human dignity, and contributes positively to society. Across all four modes, the call for artificial integrity emphasizes a holistic approach to AI development and deployment – one that considers not just the technical capabilities of AI but its impact on human and societal values. It is about building AI systems that not only know how to perform tasks, but also understand the broader implications of their actions in the human world.

Whether they are in business, the public sector, the nonprofit sector, or multilateral institutions, leaders aiming to pair the power of AI with an integrity-driven approach should use these four lenses to assess the purpose of the AI systems that they may develop and deploy.

The appropriate approach for leaders to adopt varies according to which of the four modes they are working within. Here are some of the central guiding posts for each.

In Marginal mode

Focus on optimizing AI to perform tasks that, while marginal, can significantly enhance operational efficiency without replacing human intelligence. This involves identifying areas where AI can provide the most value in automating routine and low-impact tasks, freeing humans to focus on more complex and creative endeavors.

Conduct thorough assessments to ensure the deployment of AI in marginal tasks justifies the investment, not only in financial terms but also in terms of societal impact, including job displacement and human considerations.

Embrace simplicity in AI design for marginal tasks, ensuring that systems are not overly complex for the problems they solve. This involves focusing on straightforward, reliable solutions that perform their intended functions without unnecessary elaboration.

In AI-first mode

Develop AI systems with built-in mechanisms for transparency and accountability, particularly in decision-making processes, to build trust among users and stakeholders. This includes clear documentation of AI decision pathways and the ability to audit and review AI actions.

Prioritize the assessment of social impacts, ensuring that the benefits of AI advancements are accessible to all and do not exacerbate existing inequalities. Implement integrity-driven AI frameworks that guide development and deployment, focusing on principles such as transparency, accountability, fairness, and respect for privacy, to ensure AI acts in ways that are consistent with human values.

Ensure that as AI solutions are scaled, they retain the algorithmic capacity to act with integrity, adhering to principles that uphold human values and respect human rights. This requires continuous monitoring and updating of AI systems to align with evolving societal values.

In Human-first mode

Design AI systems to complement and augment human intelligence, not to replace it. Focus on developing AI that supports human decision-making, creativity, and problem-solving, especially in fields where empathy and human judgment are paramount.

Maintain a high level of human control and autonomy by ensuring AI systems serve as tools for enhancement rather than control. This involves creating AI that respects human decisions and augments human abilities without undermining human agency.

Engage in collaborative development processes that include stakeholders from diverse backgrounds to ensure AI systems are culturally sensitive and developed with a focus on cultivating integrity with regard to authentic human needs.

In Fusion mode

Aim for a seamless integration of human and AI capabilities where each complements the other’s strengths, leading to innovative solutions that neither could achieve alone. This involves developing interdisciplinary teams that understand both technological and humanistic perspectives.

Foster environments and development approaches where both AI systems and humans engage in continuous learning and adaptation.

Ensure that the evolution of AI is guided by integrity-driven considerations that evolve in tandem with technological advancements. This involves establishing guidelines that are rooted in integrity yet flexible and adaptable to new discoveries and societal changes. Such guidelines could include requirements for AI systems to undergo regular impact assessments that evaluate not only their immediate effects but also their long-term implications in various scenarios.

Integrity will shape our future

Just as with artificial intelligence, developing artificial integrity is a journey. It begins with a commitment to the principle that these machines should be developed and deployed to exhibit not only intelligence, but integrity – because the definition of value that businesses seek to create today determines that of the society in which we will live tomorrow.

Hamilton Mann is group vice president of digital marketing and digital transformation at Thales, and senior lecturer at INSEAD, HEC and EDHEC Business School.
